AI Code Review vs Manual PRs: Why the ROI Is Too Big to Ignore

AI-powered tools outperform senior engineers in PRs, cut costs, and future-proof your software delivery.

Code review has always been the gatekeeper of software quality. Traditionally, this job sat firmly on the shoulders of senior engineers, painstakingly combing through pull requests (PRs) or merge requests (MRs). While their wisdom is invaluable, manual, asynchronous reviews are slow, inconsistent, and often cluttered with trivial nitpicks. Enter AI-powered review tools: faster, sharper, and, when paired with XP practices, often better.

In this article, I’ll break down where AI tools shine, where humans still matter today, and how practices like TDD, ADRs, and small commits make even those gaps shrink to irrelevance.

The AI-empowered toolbox

Let’s start with some of the players changing the review game:

CodeRabbit – Instant IDE feedback, remembers past team preferences, reduces trivial back-and-forth.

Qodo (formerly CodiumAI) – Generates tests, performs risk analysis on diffs, standardizes review quality.

Diffblue – Specializes in auto-generating Java unit tests, perfect for legacy systems drowning in untested code.

AI Code Review Plugin (JetBrains) – Embeds LLM-powered review suggestions directly in IntelliJ-based IDEs.

Bugdar – AI-enhanced security code review, especially useful for spotting vulnerabilities in PRs.

Each of these tools offers something manual review simply cannot: speed, consistency, and scalability. They never tire, they never forget, and they don’t waste time nitpicking semicolons. Importantly, they are also far stronger at detecting security issues than human reviewers. Fatigue, bias, or oversight can let vulnerabilities slip through in manual PRs. AI security tooling, by contrast, runs exhaustive pattern matching, anomaly detection, and vulnerability scanning without ever losing focus.
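What does “pattern matching” look like in practice? Here is a deliberately tiny sketch of a rule-based scan over the lines a diff adds. The rule names and regular expressions are hypothetical illustrations, not how Bugdar or any other tool above works internally; real security tooling layers data-flow analysis and learned models on top of simple patterns like these.

```python
import re
from dataclasses import dataclass

# Hypothetical rules for illustration only: name -> regex flagging a risky construct.
RULES = {
    # String-concatenated SQL passed to execute(): a classic injection risk.
    "sql-string-concat": re.compile(r"""execute\(\s*["'].*["']\s*\+"""),
    # pickle can run arbitrary code when loading untrusted data.
    "unsafe-deserialization": re.compile(r"\bpickle\.loads?\("),
    # Literal credentials assigned in source code.
    "hardcoded-secret": re.compile(r"""(?i)\b(password|api_key|secret)\s*=\s*["'][^"']+["']"""),
}


@dataclass
class Finding:
    line_no: int  # line number within the diff text
    rule: str
    text: str


def scan_added_lines(diff: str) -> list[Finding]:
    """Check every added line of a unified diff ('+' prefix, not '+++' headers) against every rule."""
    findings = []
    for line_no, line in enumerate(diff.splitlines(), start=1):
        if not line.startswith("+") or line.startswith("+++"):
            continue
        code = line[1:]
        for rule, pattern in RULES.items():
            if pattern.search(code):
                findings.append(Finding(line_no, rule, code.strip()))
    return findings


if __name__ == "__main__":
    sample_diff = """\
+++ b/app/db.py
+def find_user(cur, name):
+    cur.execute("SELECT * FROM users WHERE name = '" + name + "'")
+    return cur.fetchone()
-    old_line = 1
"""
    for f in scan_added_lines(sample_diff):
        print(f"line {f.line_no}: {f.rule}: {f.text}")
```

Run against the sample diff, it reports the string-concatenated SQL on line 3. The value is not in these particular regexes but in the fact that every added line is checked against every rule, every time.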

ROI: The brutal numbers

It is doubtful that manual asynchronous reviews still justify their cost:

A senior engineer spends 30–60 minutes reviewing a single PR, and that review is often filled with style comments while edge cases still slip through.

An AI-powered tool delivers near-instant feedback in the IDE, before the PR is even created.

How much value do asynchronous manual reviews through PRs really still deliver? Seriously.

That’s not a 10% gain; that’s a step change. Multiply it across dozens of PRs per week and you’re saving entire engineer-weeks every sprint. For an organization, that translates into faster delivery, lower cost per feature, fewer production issues, and significantly lower security risk.
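To see where “engineer-weeks” comes from, here is the back-of-the-envelope arithmetic as a small function. The inputs are assumptions, not measurements: 36 PRs per week stands in for “dozens”, 45 minutes is the midpoint of the 30–60 minute range, a sprint is two weeks, and an engineer-week is 40 hours. The `share_of_review_time_saved` parameter is a hypothetical knob for how much manual review time AI feedback actually replaces.

```python
def engineer_weeks_saved_per_sprint(
    prs_per_week: int,
    review_minutes_per_pr: float,
    share_of_review_time_saved: float = 1.0,
    sprint_weeks: int = 2,
    hours_per_engineer_week: float = 40.0,
) -> float:
    """Back-of-the-envelope estimate of senior review time freed per sprint.

    share_of_review_time_saved is a hypothetical knob: 1.0 assumes AI feedback
    replaces the manual review entirely; 0.5 assumes it halves the review time.
    """
    hours_per_week = prs_per_week * review_minutes_per_pr / 60
    hours_per_sprint = hours_per_week * sprint_weeks * share_of_review_time_saved
    return hours_per_sprint / hours_per_engineer_week


if __name__ == "__main__":
    # Assumed: 36 PRs/week at the 45-minute midpoint of the 30-60 minute range.
    print(engineer_weeks_saved_per_sprint(36, 45))       # 1.35
    print(engineer_weeks_saved_per_sprint(36, 45, 0.5))  # 0.675
```

With full replacement, 36 × 45 minutes is 27 hours a week, or 54 hours (1.35 engineer-weeks) per two-week sprint; even if AI only halves the review effort, that is still 0.675 engineer-weeks.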

The throughput argument is supported by Dragan Stepanović’s presentation “Async Code Reviews Are Choking Your Company’s Throughput”, which shows how asynchronous reviews slow teams down dramatically. AI-powered review tools counter this by providing near-synchronous feedback directly in the IDE, reducing delays and bottlenecks and increasing throughput.
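One way to put numbers on the async-review cost is flow efficiency, the Lean ratio of active work time to total lead time. The sketch below is a simplified illustrative model, not the one from the talk: it assumes every review round adds a fixed idle wait before someone picks the PR up, and all hour values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ReviewFlow:
    coding_hours: float                   # hands-on development time
    review_rounds: int                    # back-and-forth iterations before merge
    wait_hours_per_round: float           # time the PR sits idle waiting for a reviewer
    active_review_hours_per_round: float  # time someone actively reviews or fixes

    def lead_time(self) -> float:
        per_round = self.wait_hours_per_round + self.active_review_hours_per_round
        return self.coding_hours + self.review_rounds * per_round

    def flow_efficiency(self) -> float:
        active = self.coding_hours + self.review_rounds * self.active_review_hours_per_round
        return active / self.lead_time()


if __name__ == "__main__":
    # Hypothetical: async PR review, 3 rounds, each waiting 8 hours for a reviewer.
    async_review = ReviewFlow(4, 3, 8, 0.5)
    # Hypothetical: near-synchronous IDE feedback, waits shrink to a few minutes.
    ide_feedback = ReviewFlow(4, 3, 0.1, 0.5)
    for label, flow in (("async", async_review), ("IDE", ide_feedback)):
        print(f"{label}: lead time {flow.lead_time():.1f} h, "
              f"flow efficiency {flow.flow_efficiency():.0%}")
```

With these made-up inputs, lead time drops from 29.5 hours to 5.8 hours while active work stays at 5.5 hours: it is the waiting, not the reviewing, that dominates.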

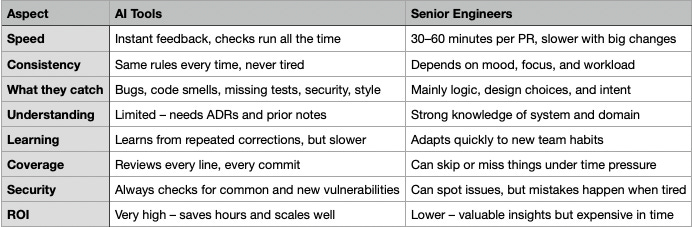

Comparison: AI vs Senior Engineers

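Here’s a simpler side-by-side view:

| Dimension | Manual senior review | AI-powered review |
|---|---|---|
| Speed | 30–60 minutes per PR, asynchronous | Near-instant, in the IDE before the PR exists |
| Consistency and coverage | Varies with fatigue and focus | Never skips a rule or a line |
| Security | Vulnerabilities can slip through under fatigue or bias | Continuous pattern matching and vulnerability scanning |
| Architectural trade-offs | Strong | Weaker today; narrowed by small changes and ADRs |
| Domain logic | Strong | Weaker today; narrowed by TDD |
| Context awareness | Strong | Weaker today; narrowed by ADRs in the repo |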

But aren’t seniors better?

Yes and no.

Where humans still beat AI (today):

Architectural trade-offs – Big-picture design decisions, weighing business constraints, anticipating long-term consequences.

Domain logic – Subtle business rules that aren’t obvious from the code.

Context awareness – Knowing when an ADR is outdated and when a new decision should be made.

Where AI already outperforms:

Breadth – It checks for bugs, smells, security issues, and style consistently across the board.

Speed – Immediate feedback at commit or PR creation.

Learning – Adapts to recurring feedback, reducing duplicate comments.

Coverage – Never misses a rule, never skips a line out of fatigue.

Security – Catches vulnerabilities before humans even notice them, from SQL injection risks to unsafe deserialization patterns.

Now, here’s the kicker: the human advantage shrinks dramatically when teams adopt strong XP practices.

XP Practices: Levelling the field

XP (Extreme Programming) practices don’t just make development smoother—they also systematically shrink or eliminate the areas where humans are still stronger than AI today. Here’s how:

Always push small changes

Small, incremental commits mean there are fewer architectural trade-offs hidden in a single PR. AI reviewers can easily check for local correctness and consistency without needing global architectural vision.

Limits human edge on architectural trade-offs: Instead of debating architecture in giant PRs, decisions are documented once in ADR.md (see below) and then enforced incrementally by AI.

Benefit for organizations: Fewer merge conflicts, faster integration, reduced cognitive load, and less reliance on senior intuition for every small change.

Example: A 50-line PR introducing a single refactor is reviewed instantly by CodeRabbit. No need for a senior to weigh in on the overall architecture because the ADR already locked the decision in.
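Keeping PRs small is easier when the rule is automated rather than remembered. Below is a minimal sketch of a size gate a CI job could run; the 50-line limit mirrors the example above and is a hypothetical team threshold, and `origin/main` is an assumed base branch. It relies only on `git diff --numstat`, which prints added and deleted line counts per file (and `-` for binary files).

```python
import subprocess

MAX_CHANGED_LINES = 50  # hypothetical team threshold


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines from `git diff --numstat` output.

    Each row is 'added<TAB>deleted<TAB>path'; binary files show '-' and are skipped.
    """
    total = 0
    for row in numstat.splitlines():
        parts = row.split("\t")
        if len(parts) < 3 or parts[0] == "-":
            continue
        total += int(parts[0]) + int(parts[1])
    return total


def check_pr_size(base: str = "origin/main") -> bool:
    """Return True if the branch stays within MAX_CHANGED_LINES since its merge-base with base."""
    out = subprocess.run(
        ["git", "diff", "--numstat", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    ).stdout
    size = changed_lines(out)
    print(f"{size} changed lines (limit {MAX_CHANGED_LINES})")
    return size <= MAX_CHANGED_LINES


if __name__ == "__main__":
    # Offline demo on sample numstat output; in CI you would call check_pr_size().
    sample = "12\t3\tsrc/cart.py\n-\t-\tdocs/diagram.png\n20\t5\ttests/test_cart.py\n"
    print(changed_lines(sample))  # 40
```

A CI step could call `check_pr_size()` and fail the build when it returns `False`, so oversized PRs get split before any reviewer, human or AI, sees them.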

Test-Driven Development (TDD)

With TDD, the expected behavior is pinned down in tests before the production code is written, and every later change must keep those tests passing. AI tools like Diffblue can amplify this by generating additional unit tests and raising coverage.

Eliminates human advantage on domain logic: Business rules that used to require a senior’s deep understanding are codified in tests. If a future change breaks a rule, tests and AI reviews flag it immediately.

Benefit for organizations: Fewer production bugs, lower defect costs, and less dependency on senior engineers to catch subtle domain mismatches.

Example: In an e-commerce platform, discount calculation logic is fully tested. A new change that breaks the calculation is caught by TDD + AI test generation, not by a tired reviewer.
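Here is what “codified in tests” might look like for the discount example. The business rule (10% off orders of 100.00 or more) and the function name `apply_discount` are hypothetical; the point is that the rule lives in executable tests written first, not in a reviewer’s head.

```python
import unittest
from decimal import Decimal


def apply_discount(order_total: Decimal) -> Decimal:
    """Hypothetical rule: 10% off orders of 100.00 or more; negative totals are rejected."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    if order_total >= Decimal("100.00"):
        return (order_total * Decimal("0.90")).quantize(Decimal("0.01"))
    return order_total


class DiscountRuleTest(unittest.TestCase):
    # Written before apply_discount in TDD: these tests encode the business rule,
    # so a later change that breaks it fails CI instead of relying on a tired reviewer.
    def test_no_discount_below_threshold(self):
        self.assertEqual(apply_discount(Decimal("99.99")), Decimal("99.99"))

    def test_discount_at_threshold(self):
        self.assertEqual(apply_discount(Decimal("100.00")), Decimal("90.00"))

    def test_rejects_negative_totals(self):
        with self.assertRaises(ValueError):
            apply_discount(Decimal("-1"))
```

Saved as, say, `test_discount.py`, the suite runs with `python -m unittest`. If a later change drops the threshold check, `test_no_discount_below_threshold` fails before anyone even opens a PR.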

ADR.md files in the codebase (ADR - Architectural Decision Records)

ADRs make architectural and strategic decisions explicit and version-controlled alongside code. AI can parse them and understand the “why” behind technical choices.

Neutralizes human advantage on context awareness: Instead of relying on a senior’s memory, the rationale is in the repo, and AI reviewers stop suggesting alternatives already dismissed.

Benefit for organizations: Transparency, faster onboarding of new engineers, and fewer repetitive debates.

Example: An ADR explains why Event Sourcing was chosen. AI reviewers align checks with that decision, preventing repeated questioning and wasted senior time.
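ADRs are just Markdown, which is what makes them easy for tools to consume. As a minimal sketch, assuming ADRs follow Michael Nygard’s widely used template (a title, then Status, Context, Decision, and Consequences sections), a review bot could extract the decision and its consequences and feed them into its review context. The sample ADR, its number, and the `parse_adr` helper are hypothetical.

```python
SAMPLE_ADR = """\
# ADR 0007: Use Event Sourcing for order history

## Status
Accepted

## Context
Auditors need a full, replayable history of every order change.

## Decision
Persist orders as an append-only stream of domain events.

## Consequences
Read models must be rebuilt from events; CRUD-style updates to order rows are rejected.
"""


def parse_adr(markdown: str) -> dict[str, str]:
    """Split an ADR written in Markdown into {section name: body}.

    Assumes a '# ' title line followed by '## ' section headings.
    Blank lines inside a section are collapsed in this simple sketch.
    """
    sections: dict[str, str] = {}
    current = None
    for line in markdown.splitlines():
        if line.startswith("# "):
            sections["Title"] = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            sections[current] = ""
        elif current is not None:
            sections[current] = (sections[current] + "\n" + line).strip()
    return sections


if __name__ == "__main__":
    adr = parse_adr(SAMPLE_ADR)
    print(adr["Title"])
    print(adr["Status"], "-", adr["Decision"])
```

The parsed `Decision` and `Consequences` text is what lets a reviewer, human or AI, recognize that a suggestion such as “just update the order row in place” was already ruled out.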

Together, these practices transform the areas where humans “still beat AI” into documented, testable, and automatable processes. Seniors become system guides and mentors, while AI enforces decisions and consistency in the day-to-day code flow.

Examples in practice

Case 1: Legacy Java monolith

Developer writes a new feature with TDD.

Diffblue auto-generates extra unit tests for untouched classes.

CodeRabbit reviews changes in the IDE, flags a missing null check.

ADR.md explains why the team is using a custom serializer instead of Jackson.

Qodo confirms PR consistency, Bugdar scans for vulnerabilities.

Organizational benefit: Reduced time-to-merge from 3 days to 1 day, higher test coverage, and lowered risk of production bugs.

Case 2: Human-only review failure

A senior misses a subtle security vulnerability in a 1,200-line PR due to fatigue.

Vulnerability leaks into production, forcing an emergency hotfix and downtime.

Organizational cost: Lost customer trust, firefighting costs, and delayed roadmap delivery.

With Bugdar running, the issue would have been flagged before merge.

Case 3: Style debates wasting time

Two engineers argue in a PR about naming conventions for three days.

Project velocity slows, frustration grows, and the feature is delayed.

Organizational cost: Slower delivery, wasted senior talent, and morale damage.

With Qodo enforcing team rules automatically, the debate never happens.
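“Enforcing team rules automatically” mostly means writing the convention down once as a check. The sketch below uses Python’s standard `ast` module to flag function names that are not snake_case and class names that are not PascalCase. This PEP 8-style convention is an assumed example, and the sketch is not how Qodo is configured; in practice, existing linters (for example, the pep8-naming rules available in flake8 and Ruff) already cover this.

```python
import ast
import re

# Assumed convention: snake_case functions (dunders allowed), PascalCase classes.
SNAKE_CASE = re.compile(r"^_{0,2}[a-z][a-z0-9_]*$")
PASCAL_CASE = re.compile(r"^_?[A-Z][A-Za-z0-9]*$")


def naming_violations(source: str) -> list[str]:
    """Report function and class names in `source` that break the convention."""
    problems = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                problems.append(f"line {node.lineno}: function '{node.name}' should be snake_case")
        elif isinstance(node, ast.ClassDef):
            if not PASCAL_CASE.match(node.name):
                problems.append(f"line {node.lineno}: class '{node.name}' should be PascalCase")
    return problems


if __name__ == "__main__":
    snippet = "class order_service:\n    def calculateTotal(self):\n        return 0\n"
    for problem in naming_violations(snippet):
        print(problem)
```

Once a check like this runs on every PR, a naming disagreement becomes a one-time discussion about the rule rather than a three-day thread on every diff.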

Case 4: Missed performance bottleneck

A manual reviewer approves a PR that introduces an inefficient query.

The issue only surfaces under load in production, causing latency and customer complaints.

Organizational cost: Poor user experience and rushed remediation work.

AI review linked with observability tools would flag the risky query pattern at review time.
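The static half of that idea can be sketched as a heuristic for the classic N+1 pattern: a database call issued inside a loop. This minimal sketch walks Python source with the standard `ast` module and flags any `.execute(...)` call in a `for` body or in a `while` loop’s condition or body. Treating every `.execute` attribute call as a query is an assumption, and comprehensions are not covered; the observability link would then decide which of these findings matter under real load.

```python
import ast


class QueryInLoopFinder(ast.NodeVisitor):
    """Collect line numbers of `<obj>.execute(...)` calls that run once per loop iteration."""

    def __init__(self) -> None:
        self.loop_depth = 0
        self.findings: list[int] = []

    def visit_For(self, node: ast.For) -> None:
        self.visit(node.target)
        self.visit(node.iter)  # evaluated once, so not "inside" the loop
        self.loop_depth += 1
        for stmt in node.body:
            self.visit(stmt)
        self.loop_depth -= 1
        for stmt in node.orelse:  # the else block runs at most once
            self.visit(stmt)

    visit_AsyncFor = visit_For

    def visit_While(self, node: ast.While) -> None:
        self.loop_depth += 1
        self.visit(node.test)  # the condition is re-evaluated every iteration
        for stmt in node.body:
            self.visit(stmt)
        self.loop_depth -= 1
        for stmt in node.orelse:
            self.visit(stmt)

    def visit_Call(self, node: ast.Call) -> None:
        if (self.loop_depth > 0
                and isinstance(node.func, ast.Attribute)
                and node.func.attr == "execute"):
            self.findings.append(node.lineno)
        self.generic_visit(node)


def find_queries_in_loops(source: str) -> list[int]:
    finder = QueryInLoopFinder()
    finder.visit(ast.parse(source))
    return finder.findings
```

Fed a function that loops over order IDs and calls `cur.execute(...)` for each one, it returns the line of that call, which is exactly the kind of pattern that only hurts once production traffic arrives.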

Case 5: Onboarding a new developer

A junior developer submits PRs that don’t align with unwritten team rules.

Seniors spend weeks correcting style and logic issues in reviews.

Organizational cost: Slow onboarding, senior time wasted.

Once those unwritten rules are captured in ADRs and review rules, AI reviewers enforce them automatically, and the new developer aligns with expectations from day one.

The future of review

Manual reviews are on their way out. AI-empowered systems are already improving rapidly on the very areas where humans still have an edge:

Architectural trade-offs: AI models are starting to analyze ADR.md files and broader code context, learning how decisions evolve. Soon, they’ll suggest when to update an ADR or even draft one.

Domain logic: With better integration into test suites and production telemetry, AI can spot logic mismatches and business rule violations. Example: detecting when discount logic breaks after a change, by comparing code against existing test scenarios.

Context awareness: Continuous learning systems are adapting to organizational patterns ever faster. Example: CodeRabbit already remembers past team feedback; imagine it automatically aligning with evolving coding standards across hundreds of repos without human prompting.

AI-driven observability feedback: As observability platforms (metrics, logs, traces) become AI-empowered, they can feed insights back into review tools. Example: if monitoring shows a memory leak tied to a module, the AI reviewer flags related code in the next PR and suggests preventative changes. This loop closes the gap on domain and context awareness by grounding reviews in real runtime behavior.

Security reinforcement: With AI continuously correlating review patterns, observability data, and vulnerability databases, risks are spotted earlier and fixed faster than manual review alone ever allowed.
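The observability feedback loop described above can be sketched as a join between runtime signals and the files a PR touches. The `RuntimeSignal` shape, and the assumption that the monitoring platform can attribute an issue to a source file, are hypothetical; real platforms expose this data in their own formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuntimeSignal:
    module_path: str  # source file the (hypothetical) monitoring platform blames
    kind: str         # e.g. "memory-leak", "p99-latency"
    detail: str


def review_hints(changed_files: list[str], signals: list[RuntimeSignal]) -> list[str]:
    """Turn runtime signals into review hints, keeping only files this PR touches."""
    touched = set(changed_files)
    return [
        f"{s.module_path}: production shows {s.kind} ({s.detail}); review this change with that in mind"
        for s in signals
        if s.module_path in touched
    ]


if __name__ == "__main__":
    # Hypothetical signals, for illustration only.
    signals = [
        RuntimeSignal("billing/cache.py", "memory-leak", "heap keeps growing between deploys"),
        RuntimeSignal("search/api.py", "p99-latency", "slow under peak load"),
    ]
    for hint in review_hints(["billing/cache.py", "README.md"], signals):
        print(hint)
```

A reviewer bot could post these hints as PR comments, grounding the review in how the code actually behaves in production.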

The trajectory is clear: what required human intuition yesterday will be automated tomorrow. Manual reviews will gradually disappear, not because seniors aren’t valuable, but because their focus will shift to higher-order problems: system strategy, business alignment, and mentoring.

Conclusion

The ROI of AI-powered code review is undeniable:

Faster feedback loops

Cleaner PRs

Massive reduction in wasted senior engineer hours

Higher consistency across teams

Stronger, earlier security detection

Combine AI tools like CodeRabbit, Qodo, Diffblue, the JetBrains AI Code Review plugin, and Bugdar with XP practices (small changes, TDD, ADRs), and you’ll find that senior engineers no longer need to sweat the small stuff. The machines have it covered.

The result? More velocity, higher quality, stronger security, and happier seniors who get to focus on what really matters.

And the writing is on the wall: as AI continues to close the gap on architecture, domain logic, context, and security, manual PRs and MRs will disappear entirely.

Final thought: If your team is still relying only on manual PRs, you’re not protecting quality; you’re burning money. AI review tools are here, they’re better at the boring parts, and the ROI is too massive to ignore.